Playing around with Tensorflow and Deepdream.py, here are the results.

This is the original picture

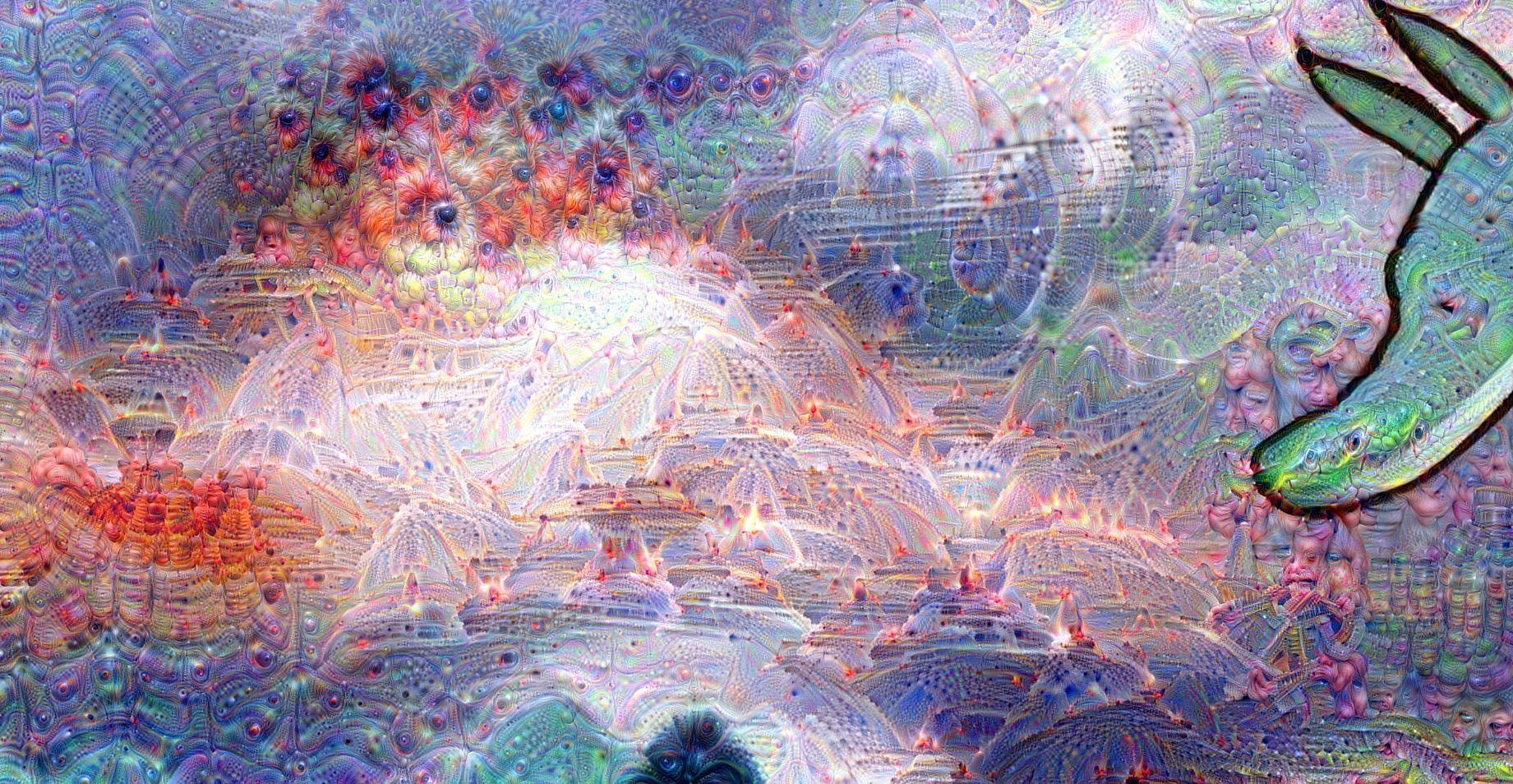

and this is a zoom of the picture after 41 iterations and using the mixed4d_3x3_bottleneck_pre_relu layer

Here is the script I used and the tensorflow_inception_graph.pb is avaialble at Tensorflow

import numpy as np

from functools import partial

import PIL.Image

import tensorflow as tf

import matplotlib.pyplot as plt

import urllib2

import os

import zipfile

from random import seed

from random import randint

import time

def main():

# start with a gray image with a little noise

img_noise = np.random.uniform(size=(224,224,3)) + 100.0

model_fn = 'tensorflow_inception_graph.pb'

# creating TensorFlow session and loading the model

graph = tf.Graph()

sess = tf.InteractiveSession(graph=graph)

with tf.gfile.FastGFile(model_fn, 'rb') as f:

graph_def = tf.GraphDef()

graph_def.ParseFromString(f.read())

t_input = tf.placeholder(np.float32, name='input') # define the input tensor

imagenet_mean = 117.0

t_preprocessed = tf.expand_dims(t_input-imagenet_mean, 0)

tf.import_graph_def(graph_def, {'input':t_preprocessed})

layers = [op.name for op in graph.get_operations() if op.type=='Conv2D' and 'import/' in op.name]

feature_nums = [int(graph.get_tensor_by_name(name+':0').get_shape()[-1]) for name in layers]

print('Number of layers', len(layers))

print('Total number of feature channels:', sum(feature_nums))

# Helper functions for TF Graph visualization

#pylint: disable=unused-variable

def strip_consts(graph_def, max_const_size=32):

"""Strip large constant values from graph_def."""

strip_def = tf.GraphDef()

for n0 in graph_def.node:

n = strip_def.node.add() #pylint: disable=maybe-no-member

n.MergeFrom(n0)

if n.op == 'Const':

tensor = n.attr['value'].tensor

size = len(tensor.tensor_content)

if size > max_const_size:

tensor.tensor_content = "<stripped %d bytes>"%size

return strip_def

def rename_nodes(graph_def, rename_func):

res_def = tf.GraphDef()

for n0 in graph_def.node:

n = res_def.node.add() #pylint: disable=maybe-no-member

n.MergeFrom(n0)

n.name = rename_func(n.name)

for i, s in enumerate(n.input):

n.input[i] = rename_func(s) if s[0]!='^' else '^'+rename_func(s[1:])

return res_def

def showarray(a):

a = np.uint8(np.clip(a, 0, 1)*255)

result = PIL.Image.fromarray(a, mode='RGB')

timestr = time.strftime("%Y%m%d-%H%M%S")

result.save('dream/img_{}.jpg'.format(timestr))

def visstd(a, s=0.1):

'''Normalize the image range for visualization'''

return (a-a.mean())/max(a.std(), 1e-4)*s + 0.5

def T(layer):

'''Helper for getting layer output tensor'''

return graph.get_tensor_by_name("import/%s:0"%layer)

def render_naive(t_obj, img0=img_noise, iter_n=20, step=1.0):

t_score = tf.reduce_mean(t_obj) # defining the optimization objective

t_grad = tf.gradients(t_score, t_input)[0] # behold the power of automatic differentiation!

img = img0.copy()

for _ in range(iter_n):

g, _ = sess.run([t_grad, t_score], {t_input:img})

# normalizing the gradient, so the same step size should work

g /= g.std()+1e-8 # for different layers and networks

img += g*step

showarray(visstd(img))

def tffunc(*argtypes):

'''Helper that transforms TF-graph generating function into a regular one.

See "resize" function below.

'''

placeholders = list(map(tf.placeholder, argtypes))

def wrap(f):

out = f(*placeholders)

def wrapper(*args, **kw):

return out.eval(dict(zip(placeholders, args)), session=kw.get('session'))

return wrapper

return wrap

# Helper function that uses TF to resize an image

def resize(img, size):

img = tf.expand_dims(img, 0)

return tf.image.resize_bilinear(img, size)[0,:,:,:]

resize = tffunc(np.float32, np.int32)(resize)

def calc_grad_tiled(img, t_grad, tile_size=512):

'''Compute the value of tensor t_grad over the image in a tiled way.

Random shifts are applied to the image to blur tile boundaries over

multiple iterations.'''

sz = tile_size

h, w = img.shape[:2]

sx, sy = np.random.randint(sz, size=2)

img_shift = np.roll(np.roll(img, sx, 1), sy, 0)

grad = np.zeros_like(img)

for y in range(0, max(h-sz//2, sz),sz):

for x in range(0, max(w-sz//2, sz),sz):

sub = img_shift[y:y+sz,x:x+sz]

g = sess.run(t_grad, {t_input:sub})

grad[y:y+sz,x:x+sz] = g

return np.roll(np.roll(grad, -sx, 1), -sy, 0)

def render_deepdream(t_obj,iter_n, img0=img_noise,

step=1.5, octave_n=5, octave_scale=1.4):

t_score = tf.reduce_mean(t_obj) # defining the optimization objective

t_grad = tf.gradients(t_score, t_input)[0] # behold the power of automatic differentiation!

# split the image into a number of octaves

img = img0

octaves = []

for _ in range(octave_n-1):

hw = img.shape[:2]

lo = resize(img, np.int32(np.float32(hw)/octave_scale))

hi = img-resize(lo, hw)

img = lo

octaves.append(hi)

# generate details octave by octave

for octave in range(octave_n):

if octave>0:

hi = octaves[-octave]

img = resize(img, hi.shape[:2])+hi

for _ in range(iter_n):

g = calc_grad_tiled(img, t_grad)

img += g*(step / (np.abs(g).mean()+1e-7))

showarray(img/255.0)

# Picking some internal layer. Note that we use outputs before applying the ReLU nonlinearity

# to have non-zero gradients for features with negative initial activations.

layer = 'mixed4d_3x3_bottleneck_pre_relu'

k = np.float32([1,4,6,4,1])

k = np.outer(k, k)

k5x5 = k[:,:,None,None]/k.sum()*np.eye(3, dtype=np.float32)

img0 = PIL.Image.open('dragon.jpg')

img0 = np.float32(img0)

showarray(img0/255.0)

for i in range (0, 40):

#render_deepdream(tf.square(T('mixed4e')), i, img0) #or use layer variable above

#channel 139

render_deepdream(tf.square(T(layer)[:,:,:,139]), i, img0)

if __name__ == '__main__':

main()

Here are the settings

Thai Salad

layer = ‘mixed5b_pool_reduce_pre_relu’

render_deepdream(tf.square(T(layer)[:,:,:,100]), i, img0)

Forest

layer = ‘mixed4c_pool_reduce’

render_deepdream(tf.square(T(layer)[:,:,:,61]), i, img0)

Haunted House

layer = ‘mixed3a’

render_deepdream(tf.square(T(layer)[:,:,:,120]), i, img0)

Dragon

render_deepdream(tf.square(T(‘mixed4e’)), i, img0)

‘mixed4e’

Here is the list of layers in the graph

{layer: ‘autostripe mixed3a_5x5’, channel: 9},

{layer: ‘conv2d0_pre_relu’, channel: 26},

{layer: ‘conv2d1’, channel: 42},

{layer: ‘conv2d1_pre_relu’, channel: 4242},

{layer: ‘head0_bottleneck’, channel: 6},

{layer: ‘head1_bottleneck_pre_relu’, channel: 4242},

{layer: ‘land mixed4d_3x3’, channel: 63},

{layer: ‘maxpool1’, channel: 4242},

{layer: ‘mixed3a’, channel: 120},

{layer: ‘mixed3a’, channel: 43},

{layer: ‘mixed3a_3x3’, channel: 4242},

{layer: ‘mixed3a_3x3’, channel: 77},

{layer: ‘mixed3a_3x3_pre_relu’, channel: 4242},

{layer: ‘mixed3a_5x5’, channel: 20},

{layer: ‘mixed3a_5x5_bottleneck_pre_relu’, channel: 2}, // swirly things, muted colors

{layer: ‘mixed3a_pool_reduce’, channel: 13},

{layer: ‘mixed3b’, channel: 4242},

{layer: ‘mixed3b_1x1_pre_relu’, channel: 65},

{layer: ‘mixed3b_3x3’, channel: 144},

{layer: ‘mixed3b_5x5_pre_relu’, channel: 10},

{layer: ‘mixed3b_pool’, channel: 34},

{layer: ‘mixed4a_3x3_bottleneck_pre_relu’, channel: 51},

{layer: ‘mixed4a_pool’, channel: 192},

{layer: ‘mixed4a_pool’, channel: 280},

{layer: ‘mixed4a_pool_reduce’, channel: 4242},

{layer: ‘mixed4a_pool_reduce’, channel: 8},

{layer: ‘mixed4b_1x1’, channel: 37},

{layer: ‘mixed4b_1x1’, channel: 46},

{layer: ‘mixed4b_3x3_bottleneck’, channel: 22},

{layer: ‘mixed4b_3x3_bottleneck_pre_relu’, channel: 53},

{layer: ‘mixed4b_5x5_bottleneck’, channel: 18},

{layer: ‘mixed4b_pool’, channel: 4242},

{layer: ‘mixed4c’, channel: 126},

{layer: ‘mixed4c_1x1_pre_relu’, channel: 4242},

{layer: ‘mixed4c_3x3’, channel: 163},

{layer: ‘mixed4c_3x3_bottleneck’, channel: 4242},

{layer: ‘mixed4c_5x5_bottleneck’, channel: 4242},

{layer: ‘mixed4c_pool_reduce’, channel: 61},

{layer: ‘mixed4d_3x3’, channel: 4242},

{layer: ‘mixed4d_3x3_bottleneck_pre_relu’, channel: 139}, // flowers

{layer: ‘mixed4d_3x3_bottleneck_pre_relu’, channel: 139}, // flowers again because nice

{layer: ‘mixed4d_3x3_bottleneck_pre_relu’, channel: 4242},

{layer: ‘mixed4d_5x5_bottleneck’, channel: 31},

{layer: ‘mixed4d_5x5_bottleneck’, channel: 4242},

{layer: ‘mixed4d_5x5_bottleneck_pre_relu’, channel: 28},

{layer: ‘mixed4d_pool_reduce_pre_relu’, channel: 5},

{layer: ‘mixed4e’, channel: 101},

{layer: ‘mixed4e’, channel: 255},

{layer: ‘mixed4e’, channel: 4242},

{layer: ‘mixed4e’, channel: 528},

{layer: ‘mixed4e_3x3_bottleneck_pre_relu’, channel: 46},

{layer: ‘mixed4e_3x3_bottleneck_pre_relu’, channel: 68},

{layer: ‘mixed4e_5x5_bottleneck’, channel: 1},

{layer: ‘mixed4e_5x5_bottleneck’, channel: 4242},

{layer: ‘mixed4e_5x5_bottleneck_pre_relu’, channel: 27},

{layer: ‘mixed4e_pool_reduce_pre_relu’, channel: 120}, // pipe eyes

{layer: ‘mixed4e_pool_reduce_pre_relu’, channel: 40}, // blotchy snakes

{layer: ‘mixed4e_pool_reduce_pre_relu’, channel: 41}, // blotchy snakes

{layer: ‘mixed5a_3x3’, channel: 93},

{layer: ‘mixed5a_3x3_bottleneck_pre_relu’, channel: 90},

{layer: ‘mixed5a_5x5_bottleneck_pre_relu’, channel: 37},

{layer: ‘mixed5b’, channel: 4242},

{layer: ‘mixed5b_1x1_pre_relu’, channel: 4242},

{layer: ‘mixed5b_pool_reduce_pre_relu’, channel: 100}, // birds and random stuff

{layer: ‘mixed5b_pool_reduce_pre_relu’, channel: 140}, // parrot mess

{layer: ‘patterns mixed3a_3x3’, channel: 4242},