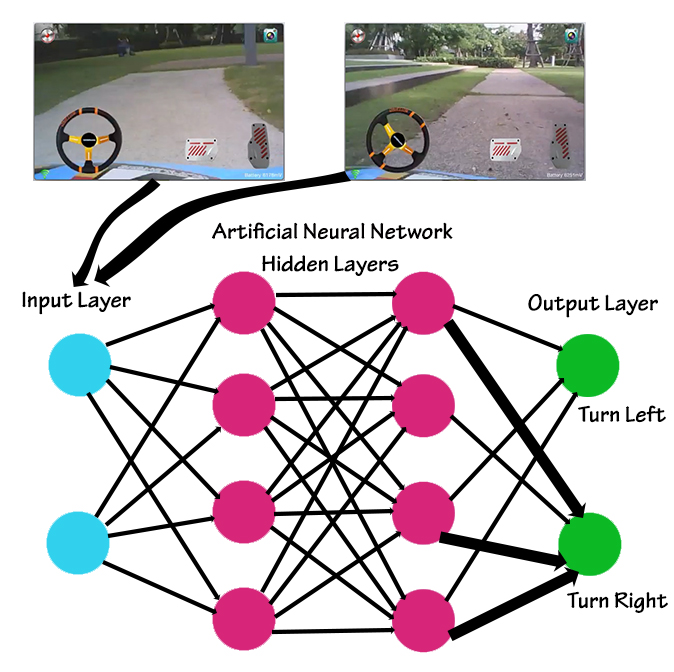

A while ago I considered adding an Artificial Intelligence (AI) agent to the app for an electric ride-on car, so that the car could follow a path or other predetermined route and/or follow someone who was walking. I started developing this using an Artificial Neural Network (ANN). The video stream from the car's camera is fed to the ANN, which then decides, based on previous training, whether to turn left or right and whether to stop, move forwards or move backwards. I had the basic functionality working: the car could follow a flat path some of the time. It wasn't great, it didn't work all the time, and it sometimes made some weird decisions, like trying to run me over. It became obvious that it needed a lot more hardware and software development. At a minimum it would need a stereo camera arrangement (two eyes) as well as a number of radars. The end product would have been pretty expensive, and while its ability to drive itself from point A to point B or follow someone around would have been novel, it would ultimately probably have been a massive waste of time. So the decision was made to shelve the project for the time being; it was an interesting exercise nevertheless.

While researching various aspects of this project, I came across some really fascinating developments and advances in AI that I thought would be worth sharing. AI is a massive and broad subject, and in the following discussion I'll only be touching on a few key concepts. Even if you're not that interested in technology, I think you'll still find this interesting.

There are a few types of AI I'll cover:

- Artificial Narrow Intelligence (ANI) also known as Weak AI

- Artificial General Intelligence (AGI) also known as Strong AI

- Artificial Super Intelligence (ASI)

Some examples of ANI are the electric ride-on car ANN project outlined above, speech recognition, handwriting recognition, DeepMind's Atari player (1) and Google's Inceptionism (2). The defining characteristic is that the intelligent agent is focused on a very specific, narrow task. There is an ANI that can soundly beat you at chess, but that's all it can do; ask it about the price of eggs in China and it would be completely unresponsive.

AGI does not exist yet, so it is a hypothetical agent that would be sentient and at least as smart as a human. Among other things, this means it would be able to learn, communicate, plan, reason and think abstractly. One of my all-time favourite hobbies is imagining myself in situations that will literally never exist, so I can't help but wonder: what would an intelligent agent that could process information at the speed of light think about and imagine? To me the most fascinating aspect of AGI is the concept of Recursive Self Improvement, which could give rise to ASI.

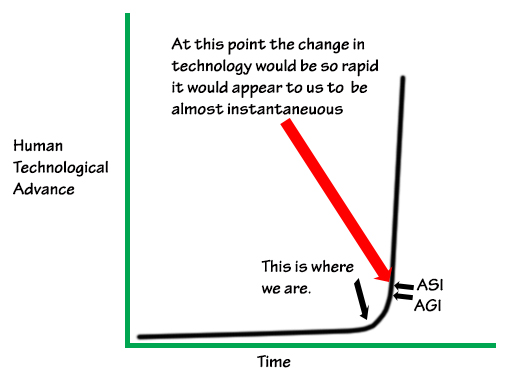

Once AGI is developed, ASI that “greatly exceeds the cognitive performance of humans in virtually all domains of interest” could follow surprisingly quickly, possibly even instantaneously. Such a superintelligence would be difficult to control or restrain, and ASI could replace humans as the dominant life form on Earth. Sufficiently intelligent machines could improve their own capabilities faster than human computer scientists could. Just as the fate of the gorillas now depends more on humans than on the actions of the gorillas themselves, the fate of humanity would depend on the actions of the machine superintelligence. The outcome could be an existential catastrophe. (3)

Electrochemical signals travel through the brain at up to ~100 m/s. Electrical signals in a circuit or processor propagate at a large fraction of the speed of light (~299,792,458 m/s), so an artificial neuron could, in principle, operate millions of times faster than a human neuron. The Singularity Hypothesis proposes that an advanced machine intelligence could create a smarter version of itself through Recursive Self Improvement. This improved version would then create a smarter version again, and so on; the result would be an intelligence explosion that we would barely be able to comprehend. The advent of superintelligence would represent a profound and disruptive transformation of our civilisation.
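To put rough numbers on the paragraph above, here is a short Python sketch. It divides the speed of light (an upper bound for electrical signals) by ~100 m/s for nerve signals. It then runs a toy model of Recursive Self Improvement. The `intelligence_explosion` function, its `gain` parameter and the rule that each generation's improvement is proportional to its current capability are hypothetical illustrations of mine, not a model taken from Kurzweil or Bostrom. The point is only that when the rate of improvement itself keeps growing, the curve bends upwards faster than ordinary exponential growth.

```python
SPEED_OF_LIGHT = 299_792_458.0   # m/s, exact by definition
NERVE_SIGNAL_SPEED = 100.0       # m/s, roughly the fastest nerve conduction

# Upper bound on how many times faster an electrical signal could travel.
ratio = SPEED_OF_LIGHT / NERVE_SIGNAL_SPEED
print(f"Upper-bound speed ratio: ~{ratio:,.0f}x")


def intelligence_explosion(start=1.0, gain=0.1, generations=10):
    """Hypothetical toy model of Recursive Self Improvement.

    Each generation builds a successor. The size of the improvement is
    assumed to be proportional to the current capability, so smarter
    versions make bigger leaps.
    """
    levels = [start]
    for _ in range(generations):
        current = levels[-1]
        levels.append(current * (1 + gain * current))
    return levels


levels = intelligence_explosion()
for generation, level in enumerate(levels):
    print(f"generation {generation:2d}: capability {level:.3g}")
```

The speed ratio comes out at roughly three million, which is where "millions of times faster" comes from. In the toy model, the growth factor per generation (`1 + gain * current`) rises every generation, unlike a fixed-rate exponential.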

To help explain what Recursive Self Improvement means, imagine if you could directly manipulate your own DNA at a cellular level and alter gene expression and phenotype. You could erase any chance of disease, change your hair colour from one day to the next and otherwise make alterations as desired. You could make your brain bigger, alter your muscle structure and so on. You could bring about any change you could imagine, within the bounds of physics. Now try to imagine what a sentient superintelligence that can process information at the speed of light would come up with.

“Superintelligence would be the last invention biological man would ever need to make, since, by definition, it would be much better at inventing than we are. All sorts of theoretically possible technologies could be developed quickly by superintelligence — advanced molecular manufacturing, medical nanotechnology, human enhancement technologies, uploading, weapons of all kinds, lifelike virtual realities, self‐replicating space colonising robotic probes, and more. It would also be super‐effective at creating plans and strategies, working out philosophical problems, persuading and manipulating, and much else beside.” (4)

To help come to grips with this and put it in some kind of perspective, we should discuss ANI first. The basis for much of modern ANI is the ANN. The most succinct definition of an ANN I found is given by Dr Robert Hecht-Nielsen: “… a computing system made up of a number of simple, highly interconnected processing elements, which process information by their dynamic state response to external inputs” (5)

ANNs are a processing model inspired by the structure of the human brain. They are organised in layers, where each layer consists of interconnected nodes and each node applies a mathematical function to its inputs. Information or patterns are presented to the network via the input layer, which communicates with one or more hidden layers where the processing is done via weighted connections. The hidden layers then link to an output layer. In the WiWheels App we have ~17,100 neurons in the input layer, one neuron per pixel for a video stream of 107 x 160 pixels (17,120 pixels), a number of neurons in the hidden layer, and 4 in the output layer, one each for backwards, forwards, left and right.

Figure 1.0 Example of an ANN. Each circle represents an artificial neuron and each arrow represents a connection. Each connection has a weight, which is learned during training from the set of images and instructions used to train the ANN; the pixel values of the image fed to the input layer are multiplied by these weights as they pass through the network.
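Here is a minimal forward-pass sketch, in Python with NumPy, of a network shaped like the one described above. The 107 x 160 input and the four outputs come from the WiWheels description. Everything else is assumed for illustration:

- greyscale input;
- a hidden layer of 32 neurons;
- sigmoid activations;
- a 0.5 threshold on each output.

The random weights stand in for trained ones, so the chosen commands are meaningless until the network is trained. Sigmoid outputs are used rather than a single winner so that "forwards" and "right" can be active at the same time.

```python
import numpy as np

HEIGHT, WIDTH = 107, 160                 # frame size from the WiWheels example
N_INPUT = HEIGHT * WIDTH                 # 17,120 input neurons, one per pixel
N_HIDDEN = 32                            # hypothetical: the real size isn't stated
OUTPUTS = ["backwards", "forwards", "left", "right"]


def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))


def init_weights(rng):
    # Small random weights; training would adjust these.
    return {
        "W1": rng.normal(0.0, 0.01, size=(N_HIDDEN, N_INPUT)),
        "b1": np.zeros(N_HIDDEN),
        "W2": rng.normal(0.0, 0.1, size=(len(OUTPUTS), N_HIDDEN)),
        "b2": np.zeros(len(OUTPUTS)),
    }


def forward(frame, w):
    x = frame.reshape(-1) / 255.0                 # flatten, scale greyscale pixels to [0, 1]
    hidden = sigmoid(w["W1"] @ x + w["b1"])       # weighted sum per hidden neuron, then activation
    return sigmoid(w["W2"] @ hidden + w["b2"])    # one score in (0, 1) per command


rng = np.random.default_rng(0)
weights = init_weights(rng)
frame = rng.integers(0, 256, size=(HEIGHT, WIDTH))   # stand-in for one camera frame
scores = forward(frame, weights)
commands = [name for name, s in zip(OUTPUTS, scores) if s > 0.5]
print(dict(zip(OUTPUTS, scores.round(3))), commands)
```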

Neural networks are adaptive and capable of learning. For example, we trained the ANN we were developing for WiWheels by recording video and, simultaneously, the instructions we were giving to the car, i.e. turn left, turn right, go backwards or go forwards. So it learned, for example, that when the car is on the left side of the path and the path continues, it should turn right while continuing forward.
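Training like this pairs each recorded frame with the command that was being given at the time, which makes it ordinary supervised learning. The sketch below shows the idea on tiny synthetic 8 x 8 "frames" in which a bright column marks the path. It is trained with gradient descent on a cross-entropy loss. To keep it short, it drops the hidden layer, so each output is effectively a logistic regression. The data, frame size, learning rate and the helpers `make_example`, `train` and `predict` are all hypothetical, not the actual WiWheels setup.

```python
import numpy as np

OUTPUTS = ["backwards", "forwards", "left", "right"]
SIZE = 8  # tiny hypothetical frames so the example runs instantly


def make_example(rng):
    """Hypothetical recorded sample: a bright column marks the path.
    If the path is right of centre, the driver pressed forwards + right,
    otherwise forwards + left."""
    frame = rng.uniform(0.0, 0.2, size=(SIZE, SIZE))   # dim background noise
    col = rng.integers(0, SIZE)
    frame[:, col] = 1.0
    target = np.array([0.0, 1.0, 0.0, 0.0])            # always moving forwards
    target[3 if col >= SIZE // 2 else 2] = 1.0
    return frame.reshape(-1), target


def train(n_samples=400, epochs=200, lr=0.5, seed=0):
    rng = np.random.default_rng(seed)
    X, Y = map(np.array, zip(*(make_example(rng) for _ in range(n_samples))))
    W = np.zeros((len(OUTPUTS), SIZE * SIZE))
    b = np.zeros(len(OUTPUTS))
    for _ in range(epochs):
        P = 1.0 / (1.0 + np.exp(-(X @ W.T + b)))   # predicted scores, shape (n, 4)
        grad = (P - Y) / n_samples                 # d(mean cross-entropy)/d(logits)
        W -= lr * grad.T @ X                       # gradient descent on the weights
        b -= lr * grad.sum(axis=0)                 # ...and on the biases
    return W, b


def predict(W, b, x):
    """Return the commands whose score is above 0.5."""
    scores = 1.0 / (1.0 + np.exp(-(W @ x + b)))
    return [name for name, s in zip(OUTPUTS, scores) if s > 0.5]


W, b = train()
x, _ = make_example(np.random.default_rng(1))
print(predict(W, b, x))
```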

Another good example is the ANN that DeepMind/Google developed to play Atari games. (6)

They created an intelligent agent that, receiving only the pixels (game screen) and the game score as inputs, was able to teach itself how to play; that is, it learned how to play various Atari games through experience alone. It far surpassed the performance of all previous algorithms and achieved a level comparable to professional human gamers across 49 different games.
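DeepMind's agent used a deep neural network to approximate Q-learning, a reinforcement learning method in which the only feedback is a reward (there, the game score). The sketch below is a heavily simplified stand-in: plain tabular Q-learning on a hypothetical six-square corridor "game" with a reward at the far end. There are no pixels and no neural network here. Treat it only as an illustration of an agent learning what to do from score alone. The corridor, the reward and the parameter values are my assumptions.

```python
import random

N_STATES = 6            # hypothetical 1-D "game": start at square 0, reward at square 5
ACTIONS = (-1, +1)      # move left or move right


def step(state, action):
    nxt = min(max(state + action, 0), N_STATES - 1)   # walls at both ends
    reward = 1.0 if nxt == N_STATES - 1 else 0.0
    return nxt, reward, nxt == N_STATES - 1


def q_learning(episodes=500, alpha=0.1, gamma=0.9, epsilon=0.1, seed=0):
    rng = random.Random(seed)
    q = [[0.0, 0.0] for _ in range(N_STATES)]   # Q[state][action]: expected future score
    for _ in range(episodes):
        state, done = 0, False
        while not done:
            # Epsilon-greedy: usually exploit the best-known action, sometimes explore.
            if rng.random() < epsilon or q[state][0] == q[state][1]:
                a = rng.randrange(2)
            else:
                a = 0 if q[state][0] > q[state][1] else 1
            nxt, reward, done = step(state, ACTIONS[a])
            # Q-learning update: move the estimate towards reward + discounted best future value.
            target = reward if done else reward + gamma * max(q[nxt])
            q[state][a] += alpha * (target - q[state][a])
            state = nxt
    return q


q = q_learning()
policy = ["right" if q[s][1] > q[s][0] else "left" for s in range(N_STATES - 1)]
print(policy)
```

After training, the greedy policy should be to move right from every square, learned purely from the reward signal.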

They have created the first artificial agent that is capable of learning to excel at a diverse array of challenging tasks. Pretty exciting development.

We haven't even touched on the math involved in ANNs, which for me was pretty daunting. If you want to get into it more deeply, check out the Stanford Machine Learning course by Andrew Ng, which is free and available online. (7)
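For a flavour of that math, here are two standard building blocks in common textbook notation; they are not tied to WiWheels specifically. A neuron $j$ outputs an activation function $\sigma$ applied to the weighted sum of its inputs $x_i$ plus a bias $b_j$. Training then nudges each weight $w_{ij}$ against the gradient of a loss $L$, scaled by a learning rate $\eta$ (gradient descent).

```latex
a_j = \sigma\!\left(\sum_i w_{ij}\, x_i + b_j\right), \qquad \sigma(z) = \frac{1}{1 + e^{-z}}

w_{ij} \leftarrow w_{ij} - \eta \, \frac{\partial L}{\partial w_{ij}}
```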

So how do we go from ANI to AGI? It will be a leap, and quite simply an incredibly difficult one. There are two aspects to the development of AGI: the software (code/instructions) and the hardware (processors/memory).

One approach being pursued to create the software, which I think of as an astonishingly complex mathematical model, is to reverse engineer the brain. To do this you need to look inside the brain to understand how it works. A number of powerful new scanning technologies have emerged, and progress is being made on that front. Researchers are also accumulating data on the precise function of the brain's various components and systems, from individual synapses to larger regions such as the cerebellum. It will be some time before we understand our own brain well enough to simulate it or create a mathematical model of it; the human brain is arguably the most complex thing we know of in the universe. Based on what I have read, I think creating the software for AGI will be the most challenging aspect.

As an aside, a lot of the information I have used here comes from Ray Kurzweil's book The Singularity Is Near.

In this book he uses a fairly rudimentary method for approximating the computational capacity of the brain in Calculations Per Second (CPS). He looked at various research on different parts of the brain that estimated the capacity in CPS of a particular component. He then scaled that estimate up by the ratio of the weight of the whole brain to the weight of that component. He did this for a number of different components, using estimates from a number of different researchers, and always arrived at around 10¹⁶ CPS. So I think we can accept that as a reasonable estimate. Even if it is out by orders of magnitude it won't really matter much, since there is already a computer that is capable of this number. The world's fastest computer is China's Tianhe-2, which has been clocked at 3.4 × 10¹⁶ to 5.5 × 10¹⁶ CPS, but it is huge: it takes up 720 m² of floor space, draws 17.6 megawatts of power and cost $400 million.
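The scaling method described above amounts to one multiplication per component. In the Python sketch below, the ~1,400 g brain mass is a rough round figure. The component estimates are made-up placeholders chosen only to show the arithmetic; they are not Kurzweil's actual inputs. The function name `whole_brain_cps` is hypothetical.

```python
import math

BRAIN_MASS_G = 1400.0  # rough adult human brain mass in grams (approximate)


def whole_brain_cps(component_cps, component_mass_g, brain_mass_g=BRAIN_MASS_G):
    """Scale a CPS estimate for one brain component up to the whole brain
    by the ratio of whole-brain mass to component mass."""
    return component_cps * (brain_mass_g / component_mass_g)


# Hypothetical placeholder estimates: (CPS for the component, component mass in g).
# These numbers are invented purely to illustrate the arithmetic.
estimates = [(1e14, 14.0), (2e13, 3.0), (5e12, 0.7)]

for cps, mass in estimates:
    total = whole_brain_cps(cps, mass)
    order = round(math.log10(total))   # nearest power of ten
    print(f"{cps:.0e} CPS from {mass} g -> roughly 10^{order} CPS for the whole brain")
```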

Ray Kurzweil suggests we think about processing power as the number of CPS you can get for $1000. He wrote The Singularity Is Near in 2005, when you could get 10¹⁰ CPS/$1000, and predicted 10¹⁶ CPS/$1000 by 2025. It's 2015 and his prediction is holding true: right now you can buy 10¹² CPS/$1000. Whether we get to 10¹⁶ CPS/$1000 with silicon-based processors remains to be seen.
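Here is a quick Python check of what those price-performance figures imply. It assumes steady exponential growth between the dates given above. The helper names are my own.

```python
import math


def implied_doubling_time(cps_start, cps_end, years):
    """Years per doubling implied by steady exponential growth."""
    annual_factor = (cps_end / cps_start) ** (1 / years)
    return math.log(2) / math.log(annual_factor)


def extrapolate(cps_now, annual_factor, years):
    """Project CPS/$1000 forward at a constant annual growth factor."""
    return cps_now * annual_factor ** years


predicted = implied_doubling_time(1e10, 1e16, 20)   # 2005 -> 2025 prediction
observed = implied_doubling_time(1e10, 1e12, 10)    # 2005 -> 2015 figures
rate_2005_2015 = (1e12 / 1e10) ** (1 / 10)
print(f"Prediction implies a doubling every {predicted:.2f} years")
print(f"2005-2015 figures imply a doubling every {observed:.2f} years")
print(f"2025 at the 2005-2015 pace: {extrapolate(1e12, rate_2005_2015, 10):.1e} CPS/$1000")
```

On these figures, the 2025 prediction implies a doubling roughly every year, while the 2005 to 2015 figures imply a doubling roughly every 1.5 years. Extrapolating that slower pace from 2015 reaches only about 10¹⁴ CPS/$1000 by 2025. Hitting 10¹⁶ would therefore need the rate itself to speed up, which is what Kurzweil's law of accelerating returns predicts.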

The human brain uses relatively slow, inefficient electrochemical signals; it gets its prodigious power from its massively parallel organisation in three dimensions. Carbon nanotubes are one emerging three-dimensional molecular computing technology. Nanotubes consist of a hexagonal network of carbon atoms rolled into a cylinder, and they measure only a few atoms across. Because electrical signals travel through a circuit at a large fraction of the speed of light, the distance they have to travel largely determines the processing speed. Because nanotubes can be packed so closely together, processors based on them could be many thousands of times faster than those based on silicon. Interestingly, carbon is the fundamental building block of all biological organisms on Earth, largely because of its ability to form four stable covalent bonds.

In his book Ray Kurzweil spends a lot of time discussing the exponential rate of change in technology and human technological development, which he calls the law of accelerating returns. He has predicted human-level AI by 2029 and the Singularity by 2045. If we were to try to summarise all the data he presents in his book, it might look something like this.

All indications are that an intelligence explosion is only a matter of time. It will be mankind's greatest achievement or an existential threat; there probably won't be anything in between. There is a lot of discussion about what controls and architectures could be used on the road to AGI. But given how hard it is to see beyond what we might call the event horizon and imagine what ASI would be capable of, it's difficult to imagine any human construct that could limit or control an ASI.

Elon Musk, Stephen Hawking and Bill Gates, among others, have warned of the potential consequences of AGI. Elon Musk even went so far as to invest in DeepMind, one of the most prominent organisations in AI research, now owned by Google. According to an article I read, he didn't do this for profit but rather just to be able to keep an eye on them.

If you want to glimpse what the future might hold, check out Ex Machina, the best movie I have seen in a long time. It was written and directed by Alex Garland, the same guy who wrote The Beach, 28 Days Later and Sunshine.

I've only just touched on this subject. There are a lot of aspects we didn't get into and some we just glossed over, and there is a lot of information out there.

I’ll leave you with a quote from Ray Kurzweil “I set the date for the Singularity—representing a profound and disruptive transformation in human capability—as 2045. Despite the clear predominance of nonbiological intelligence by the mid-2040s, ours will still be a human civilisation. We will transcend biology, but not our humanity.”

References

- https://www.cs.toronto.edu/~vmnih/docs/dqn.pdf

- http://googleresearch.blogspot.com/2015/06/inceptionism-going-deeper-into-neural.html

- https://en.wikipedia.org/wiki/Superintelligence:_Paths,_Dangers,_Strategies

- http://www.nickbostrom.com/views/superintelligence.pdf

- http://pages.cs.wisc.edu/~bolo/shipyard/neural/local.html

- https://www.coursera.org/learn/machine-learning/home/info

- https://en.wikipedia.org/wiki/The_Singularity_Is_Near

I saw DeepMind's AlphaGo beat the world Go champion a few months ago; deeply impressive and a bit scary.
